
NSX virtual switch types enhance networking capabilities

NSX-T supports both the N-VDS and VDS switches. N-VDS requires its own dedicated network adapters, while VDS lets a single switch use all of a host's network adapters for all network types.

VMware's NSX-T Data Center supports ESXi hosts, KVM hosts, bare-metal servers and edge machines that handle routing and services, all of which require a virtual Layer 2 switch in software. With NSX-T 3 and vSphere 7, you can now use either of two NSX virtual switch types, N-VDS or VDS, to provide networking in NSX-T and vSphere 7.

In a vSphere deployment, ESXi hosts come equipped with a virtual Layer 2 switch, which is either a standard or distributed switch. Standard and distributed switches have different scalability capabilities and features, but they both transport Ethernet frames to and from VMs and the physical network.

When you implement network virtualization with VMware's NSX-T Data Center, a separate software stack must handle VM traffic. In a software-defined data center (SDDC), the virtualization software controls logical switching.

If you run NSX-T 3 with vSphere 7, you can use two types of switches: the NSX-T Virtual Distributed Switch (N-VDS) and the vSphere 7 Distributed Switch (VDS). The N-VDS switch requires its own dedicated network adapters, while VDS lets a single switch use all of a host's network adapters (four, in this article's example) for all network types.

Get to know the N-VDS switch

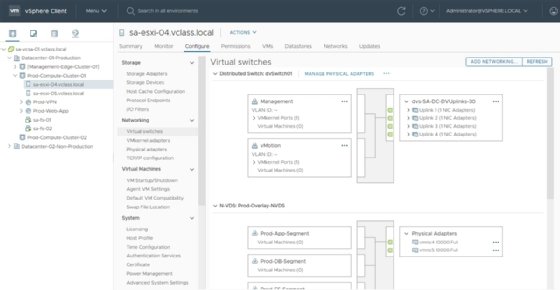

The first NSX-T switch is the N-VDS switch, which supports ESXi, KVM, bare-metal servers and edge machines. Figure 1 shows an ESXi host configured with a distributed switch named dvSwitch01, as well as an NSX-T N-VDS switch named Prod-Overlay-NVDS.

Figure 1 reveals one of the disadvantages of N-VDS: each switch requires its own network adapters. In Figure 1, the distributed switch has four uplinks, while two separate adapters, vmnic4 and vmnic5, are dedicated to the N-VDS switch.

The same issue applies to KVM hosts, bare-metal servers and edge machines, because each physical adapter can serve as an uplink for only one switch at a time. But N-VDS is the only choice for KVM, bare-metal and edge machines, so you must come up with the most efficient design, which is usually one switch per transport node. That way, the switch can use all of the node's adapters.
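To make the one-switch-per-adapter rule concrete, here is a minimal Python sketch that checks a host's uplink layout for adapters assigned to more than one switch. The function name and the dictionary layout are hypothetical, not part of any VMware tool, and the vmnic0 through vmnic3 names for dvSwitch01 are assumed, since Figure 1 only names vmnic4 and vmnic5.

```python
from collections import Counter

def find_shared_adapters(assignments: dict[str, list[str]]) -> set[str]:
    """Return physical adapters that appear under more than one switch.

    `assignments` maps a switch name to the vmnics it uses as uplinks.
    Because an adapter can back only one switch at a time, any adapter
    listed under two switches is a configuration error.
    """
    # Count each adapter once per switch, then keep those seen on 2+ switches.
    counts = Counter(nic for nics in assignments.values() for nic in set(nics))
    return {nic for nic, n in counts.items() if n > 1}

# Layout modeled on Figure 1; vmnic0-vmnic3 are assumed names.
host_layout = {
    "dvSwitch01": ["vmnic0", "vmnic1", "vmnic2", "vmnic3"],
    "Prod-Overlay-NVDS": ["vmnic4", "vmnic5"],
}
print(find_shared_adapters(host_layout))  # set()
```

An empty set means every adapter belongs to exactly one switch, which is the only layout an N-VDS design allows.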

When you use the N-VDS switch, you must create a design that delivers the required throughput and the maximum redundancy against failures. In Figure 1, vSphere uses four adapters for regular networking and only two adapters for NSX-T. If NSX-T carries most of your workload traffic, giving two adapters to the distributed switch and four to N-VDS is a more efficient split.
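The trade-off above can be expressed as a small calculation. The following sketch is a hypothetical sizing heuristic, not a VMware rule: it splits a host's adapters in proportion to the share of traffic NSX-T carries while keeping at least two adapters on each switch for redundancy. The 30% and 70% traffic shares in the example are made-up inputs.

```python
def split_adapters(total: int, nsx_share: float, min_per_switch: int = 2) -> tuple[int, int]:
    """Split `total` adapters between a vSphere distributed switch and an N-VDS.

    `nsx_share` is the fraction of traffic NSX-T carries (0.0 to 1.0).
    Each switch keeps at least `min_per_switch` adapters so a single
    adapter failure doesn't cut it off. Returns (dvs_adapters, nvds_adapters).
    """
    if total < 2 * min_per_switch:
        raise ValueError("not enough adapters for a redundant pair on each switch")
    if not 0.0 <= nsx_share <= 1.0:
        raise ValueError("nsx_share must be between 0 and 1")
    nvds = round(total * nsx_share)
    # Clamp so neither switch drops below the redundancy minimum.
    nvds = max(min_per_switch, min(total - min_per_switch, nvds))
    return total - nvds, nvds

print(split_adapters(6, 0.3))  # (4, 2), the Figure 1 layout
print(split_adapters(6, 0.7))  # (2, 4), better when NSX-T carries most traffic
```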

With NSX-T 3, ESXi hosts can also use another switch type, the vSphere 7 VDS, which doesn't require you to choose how to split network adapters between switches.

NSX-T 3.0 with vSphere 7 offers the VDS switch

If you run NSX-T 3 and vSphere 7 together, you can use either the vSphere 7 VDS or the N-VDS for NSX-T networking. When you implement VDS, NSX-T doesn't add a switch to your host. Instead, it turns the existing distributed switch into an NSX switch.

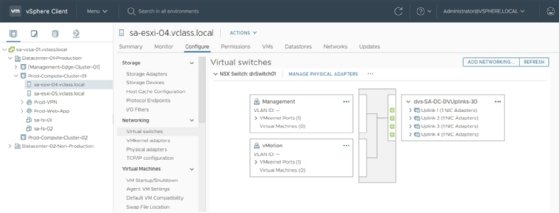

Figure 2 shows the same distributed switch, dvSwitch01, as Figure 1, but it is now tagged as an NSX switch. The switch still provides the same networking capabilities, such as vMotion and management traffic.

Because NSX networking now runs on the VDS switch, you can use all four of the switch's network adapters for all network types. This provides enough bandwidth for every traffic type and offers maximum redundancy. If you equip a host with more adapters, the system can use them across all workloads.
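A rough way to compare the two designs is to count, for each traffic type, how many uplinks it can reach. The sketch below assumes a hypothetical host with four 10 Gbps adapters, split two and two in the N-VDS design; the class and function names are illustrative. The bandwidth figure is only a theoretical aggregate, since how much a single flow actually gets depends on the teaming policy.

```python
from dataclasses import dataclass

@dataclass
class Switch:
    name: str
    uplinks: int               # physical adapters assigned to this switch
    traffic: tuple[str, ...]   # traffic types the switch carries

def per_traffic(switches: list[Switch], gbps_per_uplink: int) -> dict[str, tuple[int, int]]:
    """Map each traffic type to (aggregate uplink Gbps, adapter failures it survives)."""
    result = {}
    for sw in switches:
        for kind in sw.traffic:
            # With n uplinks, traffic keeps at least one path after n - 1 failures.
            result[kind] = (sw.uplinks * gbps_per_uplink, sw.uplinks - 1)
    return result

# Hypothetical four-adapter host.
split = [
    Switch("dvSwitch", 2, ("management", "vMotion")),
    Switch("N-VDS", 2, ("overlay",)),
]
converged = [Switch("VDS", 4, ("management", "vMotion", "overlay"))]

print(per_traffic(split, 10))
# {'management': (20, 1), 'vMotion': (20, 1), 'overlay': (20, 1)}
print(per_traffic(converged, 10))
# {'management': (40, 3), 'vMotion': (40, 3), 'overlay': (40, 3)}
```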

VDS is only available when you use NSX-T 3 with vSphere 7. If you run vSphere 6 or any version of NSX-T 2.x, N-VDS is your only choice. If you upgrade to vSphere 7 with NSX-T 3, your existing N-VDS switches remain operational, and you can migrate them to VDS.
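The version rules above, together with the fact that KVM, bare-metal and edge nodes support only N-VDS, fit in a small lookup function. This is a hypothetical helper that models only the versions this article discusses (vSphere 6 and 7, NSX-T 2.x and 3) and rejects anything else rather than guess.

```python
from typing import Optional

def switch_options(node_type: str, nsxt_major: int, vsphere_major: Optional[int] = None) -> list[str]:
    """Return the NSX-T switch types a transport node can use.

    Models only NSX-T 2.x/3 and vSphere 6/7, as discussed in this article.
    """
    if nsxt_major not in (2, 3):
        raise ValueError("only NSX-T 2.x and 3 are modeled")
    if node_type in ("kvm", "bare-metal", "edge"):
        # These transport nodes don't run ESXi, so N-VDS is the only option.
        return ["N-VDS"]
    if node_type != "esxi":
        raise ValueError(f"unknown node type: {node_type}")
    if vsphere_major not in (6, 7):
        raise ValueError("only vSphere 6 and 7 are modeled")
    # VDS needs both NSX-T 3 and vSphere 7; any older combination means N-VDS.
    if nsxt_major == 3 and vsphere_major == 7:
        return ["N-VDS", "VDS"]
    return ["N-VDS"]

print(switch_options("esxi", nsxt_major=3, vsphere_major=7))  # ['N-VDS', 'VDS']
print(switch_options("esxi", nsxt_major=2, vsphere_major=7))  # ['N-VDS']
print(switch_options("edge", nsxt_major=3))                   # ['N-VDS']
```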

Whether you can complete the migration without workload downtime depends on how many network adapters your hosts have. With only two adapters, you should not move your workloads onto a single adapter and use the other to set up the VDS switch, because that leaves the workloads without redundancy during the migration.

It is preferable to schedule a maintenance window during which workloads can disconnect from the network. This lets you apply a transport node profile to the entire cluster and reconfigure all hosts at once.

Alternatively, you can take a host-by-host approach. Because you can migrate VMs between hosts, you can perform a rolling migration through the cluster, reconfiguring one host at a time.
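The host-by-host approach can be pictured as a loop: empty a host, reconfigure it, then move on to the next. The toy model below makes no vSphere or NSX-T API calls; moving a VM between lists stands in for vMotion, and setting the host's switch type stands in for the actual reconfiguration. The function and all host and VM names are hypothetical.

```python
def rolling_migration(cluster: dict[str, list[str]]) -> tuple[dict[str, str], dict[str, list[str]]]:
    """Toy model of a host-by-host N-VDS-to-VDS migration.

    `cluster` maps host names to the VMs they run; it is copied, not modified.
    Returns (switch type per host, final VM placement).
    """
    placement = {host: list(vms) for host, vms in cluster.items()}
    switch_type = {host: "N-VDS" for host in placement}
    if len(placement) < 2:
        raise ValueError("a rolling migration needs at least two hosts")
    for host in placement:
        others = [h for h in placement if h != host]
        # Evacuate: spread this host's VMs round-robin across the other hosts,
        # standing in for vMotion, which keeps the VMs running.
        for i, vm in enumerate(placement[host]):
            placement[others[i % len(others)]].append(vm)
        placement[host] = []
        # Stand-in for reconfiguring the now-empty host onto the VDS switch.
        switch_type[host] = "VDS"
    return switch_type, placement

types, final = rolling_migration({"esx01": ["vm1", "vm2"], "esx02": ["vm3"], "esx03": []})
print(types)  # {'esx01': 'VDS', 'esx02': 'VDS', 'esx03': 'VDS'}
print(final)  # {'esx01': ['vm3', 'vm2'], 'esx02': ['vm1'], 'esx03': []}
```

The point of the model is the invariant: at every step, each VM sits on some host that is not being reconfigured, and no VM is lost.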

How to create and configure NSX switches

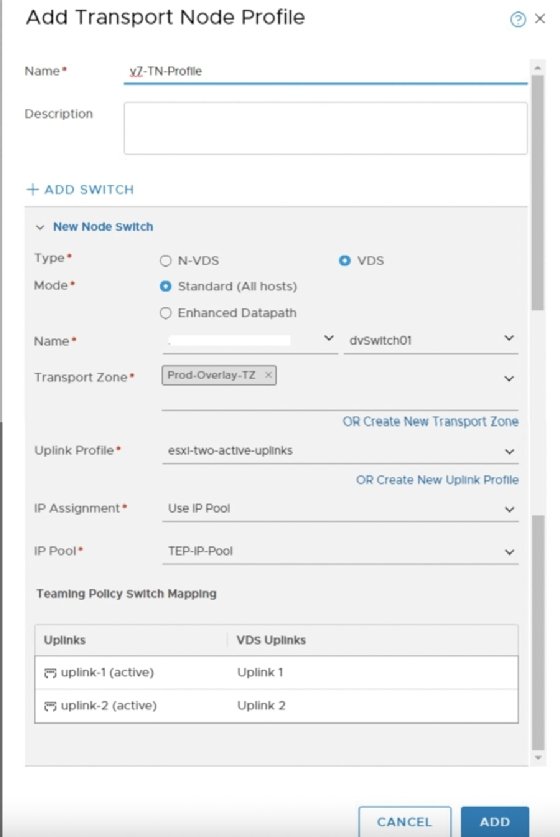

NSX-T creates both the N-VDS and VDS switches on ESXi hosts in a similar way. When your hosts are in a cluster (four hosts, in this example), you can use a transport node profile. The transport node profile holds the complete switch definition and applies it to every host in the cluster. Figure 3 shows an example VDS definition with the necessary details, such as name and switch type.
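Conceptually, a transport node profile is one switch definition stamped onto every host in a cluster. The sketch below models that idea with a frozen dataclass; the class, its field names, and the host and uplink names are hypothetical and do not mirror the NSX-T API schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SwitchDefinition:
    """Hypothetical stand-in for the switch section of a transport node profile."""
    name: str
    switch_type: str                  # "VDS" or "N-VDS"
    transport_zones: tuple[str, ...]
    uplinks: tuple[str, ...]

    def __post_init__(self):
        if self.switch_type not in ("VDS", "N-VDS"):
            raise ValueError(f"unknown switch type: {self.switch_type}")
        if not self.transport_zones:
            raise ValueError("a switch must join at least one transport zone")

def apply_profile(definition: SwitchDefinition, cluster_hosts: list[str]) -> dict[str, SwitchDefinition]:
    """Give every host in the cluster the same switch definition."""
    return {host: definition for host in cluster_hosts}

profile = SwitchDefinition(
    name="dvSwitch01",
    switch_type="VDS",
    transport_zones=("Prod-Overlay-TZ",),
    uplinks=("uplink-1", "uplink-2", "uplink-3", "uplink-4"),
)
configs = apply_profile(profile, ["esx01", "esx02", "esx03", "esx04"])
print(len(configs), configs["esx03"].switch_type)  # 4 VDS
```

Defining the switch once and applying it everywhere is what keeps hosts in the cluster from drifting apart in configuration.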

N-VDS creation is a similar process, and, as Figure 3 shows, the transport zone is essential to it. The transport zone defines the scope of networking and determines where segments, or logical switches, are available.

In Figure 3, you can see a transport zone named Prod-Overlay-TZ. The same logical switches are available to every host, in every cluster, that uses this transport zone, so the transport zone defines the span of your Layer 2 networking.
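The span rule can be sketched as a filter: a segment is available on exactly the hosts attached to its transport zone, regardless of which cluster they belong to. The function, the cluster and host names are hypothetical, and Dev-Overlay-TZ is an invented second zone used for contrast.

```python
def segment_span(transport_zone: str, hosts: dict[str, tuple[str, set[str]]]) -> dict[str, list[str]]:
    """Group, by cluster, the hosts where a segment in `transport_zone` is available.

    `hosts` maps a host name to (cluster name, transport zones the host uses).
    """
    span: dict[str, list[str]] = {}
    for host, (cluster, zones) in hosts.items():
        if transport_zone in zones:
            span.setdefault(cluster, []).append(host)
    return span

hosts = {
    "esx01": ("Cluster-A", {"Prod-Overlay-TZ"}),
    "esx02": ("Cluster-A", {"Prod-Overlay-TZ"}),
    "esx11": ("Cluster-B", {"Prod-Overlay-TZ"}),
    "esx21": ("Cluster-C", {"Dev-Overlay-TZ"}),  # hypothetical second zone
}
print(segment_span("Prod-Overlay-TZ", hosts))
# {'Cluster-A': ['esx01', 'esx02'], 'Cluster-B': ['esx11']}
```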

The switch in Figure 3 uses an overlay transport zone. The other type is a VLAN transport zone, in which VMs connect directly to VLANs that you must define in the physical network. Switches in a VLAN transport zone work much like regular vSphere switches.
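Because VLAN-backed segments depend on the physical network, a simple pre-check is to confirm that the host-facing switch ports actually trunk each segment's VLAN. The helper below is hypothetical, and the segment names and VLAN IDs are made up for illustration.

```python
def missing_vlans(segment_vlans: dict[str, int], trunked_vlans: set[int]) -> dict[str, int]:
    """Return VLAN-backed segments whose VLAN ID the physical uplinks don't carry."""
    return {seg: vid for seg, vid in segment_vlans.items() if vid not in trunked_vlans}

segments = {"db-vlan-110": 110, "web-vlan-120": 120}  # hypothetical segments
physical_trunk = {110, 130}                            # VLANs allowed on the physical ports
print(missing_vlans(segments, physical_trunk))  # {'web-vlan-120': 120}
```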

With overlay networking, the switch behaves differently: a VMkernel port called the tunnel endpoint (TEP) encapsulates traffic from VMs and carries it to other transport nodes, including other hypervisors. This sets NSX switches apart from regular vSphere switches and makes automation and scaling far easier.
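NSX-T's TEPs use Geneve encapsulation (RFC 8926). The sketch below builds and parses only the 8-byte Geneve base header, with no options, around an inner Ethernet frame. The outer Ethernet, IP and UDP headers (UDP destination port 6081) that a real TEP adds are omitted, the function names are illustrative, and the VNI of 5001 is an arbitrary example.

```python
import struct

ETHERNET_BRIDGING = 0x6558  # Geneve protocol type: the payload is an Ethernet frame

def geneve_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a minimal Geneve header (no options)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    version_optlen = 0  # version 0 (top 2 bits), option length 0 (low 6 bits)
    flags = 0           # O (control) and C (critical) bits cleared
    # The 24-bit VNI sits in the upper bits of the last 32-bit word.
    header = struct.pack("!BBHI", version_optlen, flags, ETHERNET_BRIDGING, vni << 8)
    return header + inner_frame

def geneve_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Return (VNI, inner frame) from a Geneve payload."""
    version_optlen, _flags, proto, vni_field = struct.unpack("!BBHI", packet[:8])
    if proto != ETHERNET_BRIDGING:
        raise ValueError("payload is not an Ethernet frame")
    opt_len = (version_optlen & 0x3F) * 4  # option length is counted in 4-byte words
    return vni_field >> 8, packet[8 + opt_len:]

frame = bytes(14) + b"payload"  # placeholder inner Ethernet frame
packet = geneve_encapsulate(frame, 5001)
print(packet[:8].hex())  # 0000655800138900
print(geneve_decapsulate(packet) == (5001, frame))  # True
```

The VNI is what lets the receiving transport node deliver the inner frame to the right segment, which is why the same logical switch can span hosts without any VLAN configuration in the physical network.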

It's unlikely that VMware will remove the N-VDS switch from NSX-T in favor of the VDS with vSphere 7. This is because bare-metal servers, KVM hosts and edge machines still rely on the N-VDS switch for networking and routing.

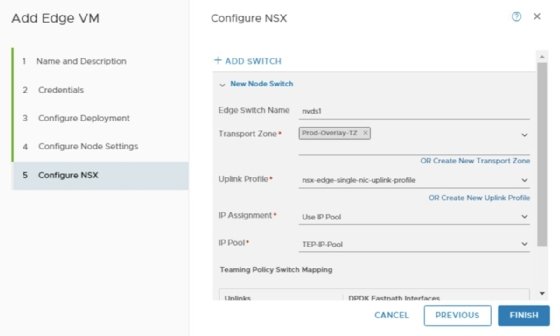

Figure 4 shows an N-VDS switch being created on an edge VM; its configuration closely resembles that of a switch on an ESXi host. The N-VDS switch belongs to a transport zone and is configured with a TEP address so it can participate in overlay networking and encapsulation.

Because KVM hosts, bare-metal servers and edge nodes run software stacks that differ from ESXi's, the N-VDS switch is their only option for the foreseeable future.